Exploring university–community partnerships

Participatory geospatial technologies have the potential to support and promote citizen engagement. This promise has encouraged a growing number of collaborations between academics and community partners who share this aim. In their recently published paper, “A web of expectations: Evolving relationships in community participatory geoweb projects,” four Geothink researchers and their colleagues cast a reflective eye on the participatory action research processes behind three completed geoweb partnership projects.

Co-author Jon Corbett, an associate professor in Community, Culture and Global Studies at the University of British Columbia’s Okanagan campus, sees their ACME journal article as helping to fill a gap in the geoweb literature. “For me, one of the things I’m most interested in is how—in a truthful and well-positioned way—we can talk about the veracity of the work that we’ve done in regards to its ability to actually bring about impact and social change,” Corbett said.

In the article, the authors compare the three cases to examine the concrete, empirical challenges the projects encountered, concentrating on the frictions that arise where technical and social considerations intersect.

Participatory geoweb initiatives commonly rely on out-of-the-box mapping tools. For these three projects, a central aim was to employ the expertise of the university researchers to co-develop and co-evaluate custom geospatial web tools that could address community partners’ objectives. Ideally, such collaborations can benefit all parties. Researchers can learn about the potential and the limitations of the geoweb as a tool for civic engagement while partners have the opportunity to reflect on their objectives and access a wider tool set for accomplishing them. In reality, collaborations require compromises and negotiations. The question then becomes: when are researchers’ academic objectives and partners’ community objectives truly complementary?

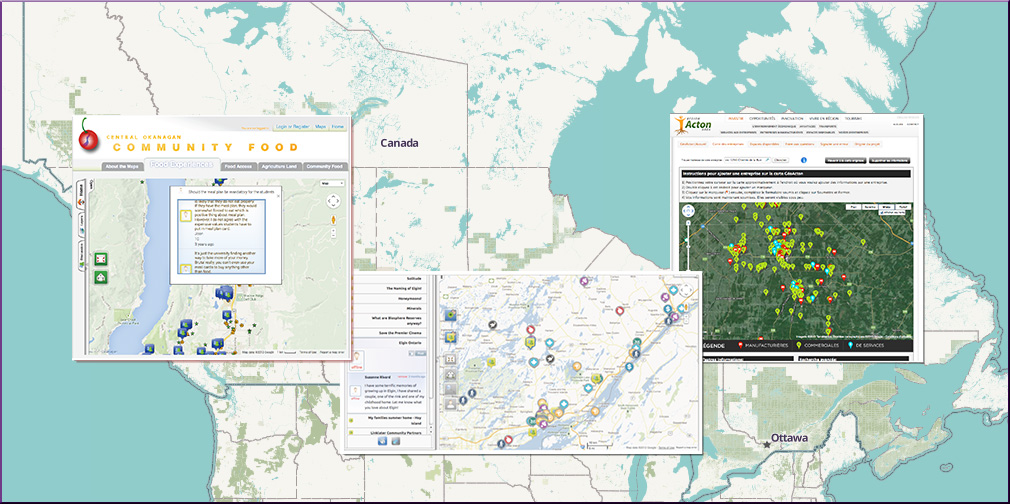

In the first case study, the geoweb was used to create a participatory business promotion website for a rural Quebec community, intended as one component of a larger regional economic development strategy. The second case was a collaboration between two university partners and a cultural heritage organization in Ontario. The partners hoped the customized online tool could “serve as a ‘living’ repository of cultural heritage information that was both accessible to the public and could facilitate the contribution of knowledge from the public.” In the third project, university researchers worked with government and grassroots organizations at local as well as provincial levels. The vision in this case was to enable non-expert community members in the Okanagan region to share their own knowledge and experiences about local food and its availability.

Corbett explained that in reflecting on their work, the researchers realized that as social scientists with very specific domains of expertise in political science, geographic information systems, and community research, “the types of skills we needed to negotiate the relationships were far different from the sorts of traditional disciplinary fields that we work in.” Their collaborators tended to see the academics more as technical consultants than as scholars. As the authors write, “most academics remain untrained in software development, design, marketing, long-term application management and updating, legal related issues, [and] terms of service.”

Although the three collaborations differed in the publics involved, the objectives negotiated for each project, and the tools used to achieve them, the authors identified several common themes. As they write, “In all three case studies, we found that the process of technology development had substantial influence on the relationship between university developers and community organization partners. This influence was seen in the initial expectations of community partners, differential in power between researcher and community, sustainability of tools and collaborations, and the change from research collaboration towards ‘deal making.’”

In the end, Corbett said, “All of the projects were extremely precarious in how we could assign value or success to them. The paper was really an academic reflection on the outcomes of those three different projects.”

Abstract

New forms of participatory online geospatial technology have the potential to support citizen engagement in governance and community development. The mechanisms of this contribution have predominantly been cast in the literature as ‘citizens as sensors’, with individuals acting as a distributed network, feeding academics or government with data. To counter this dominant perspective, we describe our shared experiences with the development of three community-based Geospatial Web 2.0 (Geoweb) projects, where community organizations were engaged as partners, with the general aim to bring about social change in their communities through technology development and implementation. Developing Geoweb tools with community organizations was a process that saw significant evolution of project expectations and relationships. As Geoweb tool development encountered the realities of technological development and implementation in a community context, this served to reduce organizational enthusiasm and support for projects as a whole. We question the power dynamics at play between university researchers and organizations, including project financing, both during development and in the long term. How researchers managed, or perpetuated, many of the popular myths of the Geoweb, namely that it is inexpensive and easy to use (though not to build, perhaps) impacted the success of each project and the sustainability of relationships between researcher and organization. Ultimately, this research shows the continuing gap between the promise of online geospatial technology, and the realities of its implementation at the community level.

Reference: Johnson, Peter A., Jon Corbett, Christopher Gore, Pamela J. Robinson, Patrick Allen, and Renee E. Sieber. 2015. “A web of expectations: Evolving relationships in community participatory geoweb projects.” ACME: An International E-Journal for Critical Geographies 14(3): 827–848.

If you have thoughts or questions about this article, get in touch with Naomi Bloch, Geothink’s digital journalist, at naomi.bloch2@gmail.com.