This year’s Association of American Geographers (AAG) Annual Meeting is in Chicago, Illinois (Photo courtesy of AAG.org).

By Drew Bush

A long lineup of Geothinkers will present at this year’s Association of American Geographers (AAG) Annual Meeting in Chicago next week. Don’t miss four of our team members as panelists on Civic technology: governance, equity and inclusion considerations on Thursday at 8:00 AM. Other highlights include presentations by Geothink Principal Investigator Renee Sieber and by our students, including Cheryl Power and Tenille Brown.

Below we’ve compiled the schedule for all of the project’s team members, collaborators and students who will be presenters, panelists and chairs during the conference. Find a PDF of our guide here. We hope it helps you choose the right sessions to join. You can also find the full preliminary AAG program here.

If you’re not able to make the conference, you can follow along on Twitter and use our list of Twitter handles below to join the conversation with our participants.

Join the Conversation on Twitter

- Alex Aylett: @openalex_

- Peter Johnson: @peterajohnson

- Zorica Nedovic-Budic: @TurasCities

- Andrea Minano: @Andrea_Minano

- Tenille Brown: @TenilleEBrown

- Claus Rinner: @ClausRinner

- Jonathan Corbett: @joncorbett

- Pamela Robinson: @pjrplan

- Sarah Elwood: @SarahElwood1

- Teresa Scassa: @teresascassa

- Victoria Fast: @VVFast

- Renee Sieber: @RE_Sieber

- Muki Haklay: @mhaklay

- Harrison Smith: @Ambiveillance

And remember to use the conference hashtag #AAG2015 and our hashtag #Geothink or address @geothinkca when you Tweet.

Come to our Sessions at AAG 2015

Tuesday, April 21

- 9:04 AM in Columbian, Hyatt, West Tower, Bronze Level—Alex Aylett on Models of Urban Climate Governance: A Typology and International Analysis in the 8:00 AM session entitled Pathways to decarbonisation 1: Problematising low carbon.

- 10:40 AM in Water Tower, Hyatt, West Tower, Bronze Level—Harrison Smith on “Smart cities should mean sharing cities”: Situating smart cities within the sharing economy in the 10:00 AM session entitled Critical Geographies of the Smart City 2.

- 12:40 PM in Grand Suite 5, Hyatt, East Tower, Gold Level—Michael Goodchild’s organized session on CyberGIS Symposium: Frontiers of Geographic Data Science.

- 7:18 PM in Grand C/D South, Hyatt, East Tower, Gold Level—Sarah Elwood as the speaker at the 6:30 PM Presidential Plenary Session: Radical Intra-Disciplinarity.

Wednesday, April 22

- 8:00 AM in Stetson D, Hyatt, West Tower, Purple Level—Jonathan Corbett on Plain Language Mapping: Rethinking the Participatory Geoweb to Include Users with Intellectual Disabilities in the 8:00 AM session entitled Looking Backwards and Forwards in Participatory GIS: Session I.

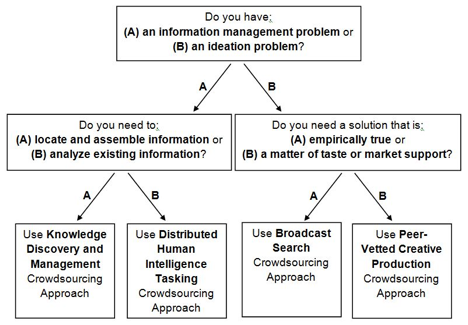

- 10:40 AM in Stetson D, Hyatt, West Tower, Purple Level—Renee Sieber on Frictionless Civic Participation and the Geospatial Web in the 10:00 AM session entitled Looking Backwards and Forwards in Participatory GIS: Session II.

- 11:20 AM in Lucerne 2, Swissôtel, Lucerne Level—Michael Goodchild as a discussant in the session entitled Spatiotemporal Symposium: Emerging Topics in Data-driven Geography (2).

- 1:40 PM in Stetson D, Hyatt, West Tower, Purple Level—Pamela Robinson on Civic Hackathons: New Terrain for Citizen-Local Government Interaction? in the 1:20 PM session entitled Looking Backwards and Forwards in Participatory GIS: Session III.

- 3:20 PM in Stetson D, Hyatt, West Tower, Purple Level—Muki Haklay as a panelist on Looking Backwards and Forwards in Participatory GIS: Session II.

Thursday, April 23

- 8:00 AM in Columbus EF, Hyatt, East Tower, Gold Level—Pamela Robinson, Jonathan Corbett, Teresa Scassa and Peter Johnson as panelists on Civic technology: governance, equity and inclusion considerations. Pamela Robinson will serve as the chair.

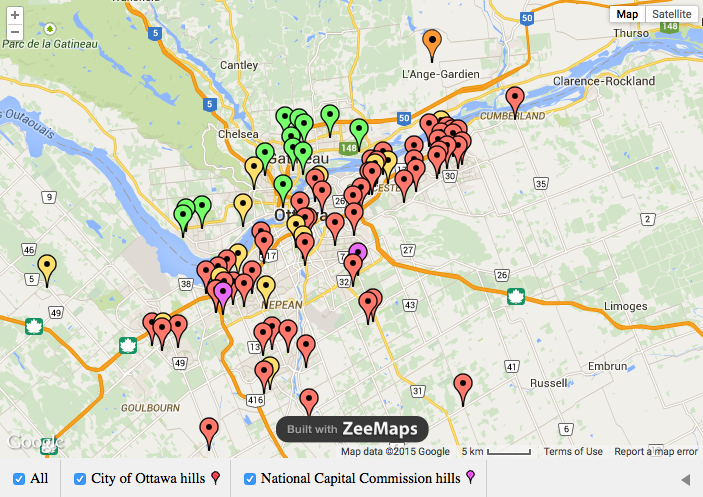

- 8:20 AM in Stetson D, Hyatt, West Tower, Purple Level—Ashley Zhang on Geosocial media as an aid to understanding place sensing and attachment in participatory planning processes in the 8:00 AM session entitled Big Data – Perils and Promises.

- 8:40 AM in Stetson F, Hyatt, West Tower, Purple Level—Tenille Brown on Law’s understanding of the virtual environment: tort liability in the geoweb in the 8:00 AM session entitled Legal Geographies 9: Human and More-Than-Human Environments, B – Viability Of The Law/Environment Project.

- 10:00 AM in Stetson D, Hyatt, West Tower, Purple Level—Andrea Minano on Geoweb Tools for Climate Change Adaptation: A Case Study in Nova Scotia’s South Shore in the 10:00 AM session entitled Citizen Science and Geoweb.

Friday, April 24

- 8:00 AM in Lucerne 1, Swissôtel, Lucerne Level—Muki Haklay’s organized session on Beyond motivation? Understanding enthusiasm in citizen science and volunteered geographic information.

- 10:00 AM in Riverside Exhibit Hall, Hyatt, East Tower, Purple Level—Claus Rinner with a poster on The Role of Maps and Composite Indices in Place-Based Decision-Making in the Geographic Information Science and Technology (GIS&T) Poster Session.

- 10:00 AM in Riverside Exhibit Hall, Hyatt, East Tower, Purple Level—Victoria Fast with a poster on Conceptualizing Volunteered Geographic Information and the Participatory Geoweb in the session entitled Geographic Information Science and Technology (GIS&T) Poster Session.

- 10:40 AM in Acapulco, Hyatt, West Tower, Gold Level—Cheryl Power on Legal requirements and Best Practices for Accessing and Licensing Data & Research Results in Spatial epidemiological research in the 10:00 AM session entitled Advances in the Spatial Epidemiology of Socioeconomic Determinants, Access, and Health.

- 11:00 AM in Skyway 282, Hyatt, East Tower, Blue Level—Piotr Jankowski on Eliciting Public Participation in Local Land Use Planning through Geo-questionnaires in the 10:00 AM session entitled Perceptions and sociopolitical narratives in environmental planning II: Case studies of decision-making.

- 1:20 PM in Regency C, Hyatt, West Tower, Gold Level—Muki Haklay’s organized session entitled OpenStreetMap Studies 1.

- 1:20 PM in Dusable, Hyatt, West Tower, Silver Level—Sarah Elwood chairing the AAG’s J. Warren Nystrom Award Session I.

- 3:20 PM in Dusable, Hyatt, West Tower, Silver Level—Sarah Elwood chairing the AAG’s J. Warren Nystrom Award Session II.

- 3:20 PM in Acapulco, Hyatt, West Tower, Gold Level—Michael Goodchild delivering the closing plenary for the Symposium on International Geospatial Health Research.

- 3:40 PM in Lucerne 1, Swissôtel, Lucerne Level—Teresa Scassa on Open or Closed? Licensing Real-time GPS Data in the 3:20 PM session entitled Public Transportation, GIS, and Spatial Analysis.

- 4:00 PM in Regency C, Hyatt, West Tower, Gold Level—Peter Johnson on Challenges and Constraints to Municipal Government Adoption of OpenStreetMap in the 3:20 PM session entitled OpenStreetMap Studies 2.

- 4:20 PM in Regency C, Hyatt, West Tower, Gold Level—Muki Haklay on COST Energic – A European Network for research of VGI: the role of OSM/VGI/Citizen Science definitions in the 3:20 PM session entitled OpenStreetMap Studies 2.

- 6:00 PM in Grand Suite 5, Hyatt, East Tower, Gold Level—Robert Feick on Exploring The Emerging “Image of the City” Using VGI in the 5:20 PM session entitled CyberGIS Symposium: Geospatial and Spatiotemporal Ontology and Semantics III (Ontologies of Place).

Saturday, April 25

- 8:00 AM in New Orleans, Hyatt, West Tower, Gold Level—Victoria Fast co-presenting with Heather A. Heart on Crowd mapping mental health promotion through the Thought Spot project in the 8:00 AM session entitled Mental Health Geographies.

- 5:00 PM in Michigan A, Hyatt, East Tower, Ped Path—Zorica Nedovic-Budic on Critical Cartography and Public Discourse in the 4:00 PM session entitled Geo ICT design for urban resilience.

If you have thoughts or questions about this article, get in touch with Drew Bush, Geothink’s digital journalist, at drew.bush@mail.mcgill.ca.