Geothink student Evan Hamilton recently defended his master’s thesis on Toronto data journalists’ use of open data.

By Naomi Bloch

Data journalists are some of the most active users of government open data in Canada. In his recently defended thesis, Evan Hamilton, a master’s student in the University of Toronto’s Faculty of Information, examined the role of data journalists as advocates, users, and producers of open data.

Hamilton’s thesis, titled “Open for reporting: An exploration of open data and journalism in Canada,” addressed four research questions:

- Are open data programs in Ontario municipalities developing in a way that encourages effective business and community development opportunities?

- How and why do journalists integrate open data in reporting?

- What are the major challenges journalists encounter in gaining access to government data at the municipal level?

- How does journalism shape the open data development at both the policy level and the grassroots level within a municipality?

To inform his work, Hamilton conducted in-depth, semi-structured interviews with three key data journalists in the City of Toronto: Joel Eastwood at the Toronto Star, William Wolfe-Wylie at the CBC, and Patrick Cain at Global News. While open data is often touted as a powerful tool for fostering openness and transparency, in his paper Hamilton notes that there is always the risk that “the rhetoric around open data can also be employed to claim progress in public access, when in fact government-held information is becoming less accessible.”

In an interview with Geothink, Hamilton explained that the journalists made important distinctions between the information currently available on Canadian open data portals and the information they typically seek in order to develop compelling, public-interest news stories. “One of the big things I took away from my interviews was the differentiation that journalists made between Freedom of Information and open data,” said Hamilton. “They were using them for two completely different reasons. Ideally, they would love to have all that information available on open data portals, but the reality is that the portals are just not as robust as they could be right now. And a lot of that information does exist, but unfortunately journalists have to use Freedom of Information requests to get it, which is a process that can take a lot of time and not always lead to the best end result.”

Legal provisions at various levels of government allow Canadians to make special Freedom of Information requests to try to access public information that is not readily available by other means. A nominal fee is usually charged. In Toronto, government agencies generally need to respond to such requests within 30 days. Even so, government responses do not always result in the provision of usable data, and if journalists request large quantities of information, departments have the right to extend the 30-day response time. For journalists, a delay of even a few days can kill a story.

While the journalists Hamilton interviewed recognized that open data portals were limited by a lack of resources, there was also a prevailing opinion that many government agencies still prefer to vet and protect the most socially relevant data. “Some were very skeptical of the political decisions being made,” Hamilton said. “Like government departments are intentionally trying to prevent access to data on community organizations or data from police departments looking at crime statistics in specific areas, and so they’re not providing it because it’s a political agenda.”

Data that helps communities

In his thesis, Hamilton states that further research is needed to better understand the motivations behind government behaviours. A more nuanced explanation involves the differing cultures within specific municipal institutions. “The ones that you would expect to do well, do do well, like the City of Toronto’s Planning and Finance departments,” Hamilton said. “Both of them provide really fantastic data that’s really up-to-date, really useful and accessible. They have people you can talk to if you have questions about the data. So those departments have done a fantastic job. It’s just having all the other departments catch up has been a larger issue.”

An issue of less concern to the journalists Hamilton consulted is privacy. The City’s open data policy stresses a balance between appropriate privacy protection mechanisms and the timely release of information of public value. Hamilton noted that in Toronto, the type of information currently shared as open data poses little risk to individuals’ privacy. At the same time, the journalists he spoke with tended to view potentially high-risk information such as crime data as information for which public interest should outweigh privacy concerns.

Two of the three journalists stressed the potential for data-driven news stories to help readers better understand and address needs in their local communities. According to Hamilton’s thesis, “a significant factor that prevents this from happening at a robust level is the lack of data about marginalized communities within the City.”

The journalists’ on-the-ground perspective echoes the scholarly literature, Hamilton found. If diverse community voices are not involved in the development of open data policies and objectives, government efforts are less likely to meet community needs. Because of their relative power, journalists do recognize themselves as representing community interests. “In terms of advocacy, the journalists identify themselves as open data advocates just because they have been the ones pushing the city for the release of data, trying to get things in a usable format, and creating standard processes,” Hamilton said. “They feel they have that kind of leverage, and they act as an intermediary between a lot of groups that don’t have the ability to get to the table during negotiations and policy development. So they’re advocating for their own interests, but as they fulfill that role they’re advocating for marginalized communities, local interest groups, and people who can’t get to the table.”

Policy recommendations

Hamilton’s research also pointed to ways in which data journalists can improve their own professional practices when creating and using open data. “There needs to be more of a conversation between journalists about what data journalism is and how you can use open data,” Hamilton said. “When I talked to them, there was not a thing like, ‘Any time you use a data set in your story you cite the data set or you provide a link to it.’ There’s no standard practice for that in the industry, which is problematic, because then they’re pulling numbers out of nowhere and they’re trusting that you’ll believe it. If you’re quoting from a data set you have to show exactly where you’re getting that information, just like you wouldn’t anonymize a source needlessly.”

While Hamilton concentrated on building a picture of journalists’ open data use in the City of Toronto, his findings resulted in several policy recommendations for government agencies more broadly. First, Hamilton stressed that “as a significant user group, journalists need to be consulted in a formal setting so that open data platforms can be better designed to target their specific needs.” This is necessary, according to Hamilton, in order to permit journalists to more effectively advocate on behalf of their local communities and those who may not have a voice.

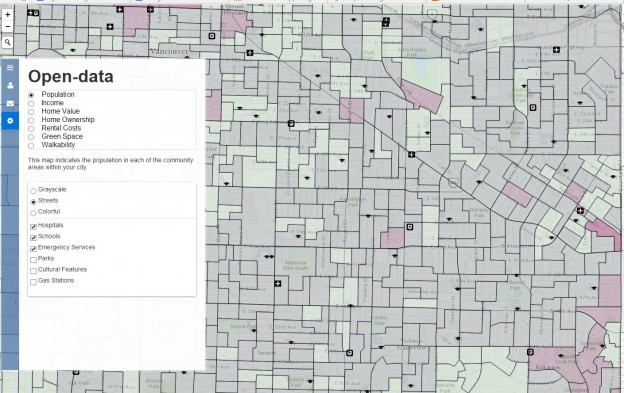

Another recommendation is aimed at meeting the needs of open data users who have different levels of competency. Although he recognizes the challenges involved, in his concluding chapter Hamilton writes, “Municipal governments need to allocate more resources to open data programs if they are going to be able to fulfill the needs of both a developer class requiring technical specifications, and a general consumer class that requires tools (for example, visualizations and interactives) to consume the data.”

Finally, Hamilton recommends that municipalities engage in more formal efforts “to combat internal culture in municipal departments that are against publishing public information. Data should be viewed as a public service, and public data should be used in the public interest.”

If you have any questions for Evan, reach him on Twitter here: @evanhams

Evan Hamilton successfully defended his Master of Information thesis on September 29 at the Faculty of Information, University of Toronto. His work was supervised by Geothink co-applicant researcher Leslie Regan Shade, associate professor in the University of Toronto’s Faculty of Information. Other committee members included University of Toronto’s Brett Caraway and Alan Galey (chair), as well as April Lindgren, an associate professor at Ryerson University’s School of Journalism and founding director of the Ryerson Journalism Research Centre, a Geothink partner organization.

Abstract

This thesis describes how open data and journalism have intersected within the Canadian context in a push for openness and transparency in government collected and produced data. Through a series of semi-structured interviews with Toronto-based data journalists, this thesis investigates how journalists use open data within the news production process, view themselves as open data advocates within the larger open data movement, and use data-driven journalism in an attempt to increase digital literacy and civic engagement within local communities. It will evaluate the challenges that journalists face in gathering government data through open data programs, and highlight the potential social and political pitfalls for the open data movement within Canada. The thesis concludes with policy recommendations to increase access to government held information and to promote the role of data journalism in a civic building capacity.

Reference: Hamilton, Evan. (2015). Open for reporting: An exploration of open data and journalism in Canada (MI thesis). University of Toronto.

If you have thoughts or questions about this article, get in touch with Naomi Bloch, Geothink’s digital journalist, at naomi.bloch2@gmail.com.