Geothink’s 2016 Summer Institute took place the second week of May at Ryerson University in Toronto with 35 students in attendance.

By Drew Bush

We’re very excited to present you with our 11th episode of Geothoughts. You can also subscribe to this podcast by finding it on iTunes.

In this episode, we take a look at the just-concluded 2016 Geothink Summer Institute. Students at this year’s institute learned difficult lessons about applying actual open data to civic problems through group work and interactions with Toronto city officials, local organizations, and Geothink faculty. The last day of the institute culminated in a writing-skill incubator that gave participants the chance to practice communicating even the driest details of work with open data in a manner that grabs the attention of the public.

Held annually as part of a five-year Canadian Social Sciences and Humanities Research Council (SSHRC) partnership grant, the Summer Institute devotes three days of hands-on learning to topics important to research taking place under the grant. This year, each day of the institute alternated lectures and panel discussions with work sessions where instructors mentored groups one-on-one about the many aspects of open data.

Thanks for tuning in. And we hope you subscribe with us at Geothoughts on iTunes. A transcript of this original audio podcast follows.

TRANSCRIPT OF AUDIO PODCAST

Welcome to Geothoughts. I’m Drew Bush.

[Geothink.ca theme music]

The 2016 Geothink Summer Institute wrapped up during the second week of May at Ryerson University in Toronto. Held annually as part of a five-year Canadian Social Sciences and Humanities Research Council (SSHRC) partnership grant, each year the Summer Institute devotes three days of hands-on learning to topics important to research taking place in the grant.

The 35 students at this year’s institute learned difficult lessons about applying actual open data to civic problems through group work and interactions with Toronto city officials, local organizations, and Geothink faculty. The last day of the institute culminated in a writing-skill incubator that gave participants the chance to practice communicating even the driest details of work with open data in a manner that grabs the attention of the public.

On day one, students confronted the challenge of working with municipal open data sets to craft new applications that could benefit cities and their citizens. The day focused on an Open Data Iron Chef that takes its name from the popular television show of the same name. Geothink.ca spoke to the convener of the Open Data Iron Chef while students were still hard at work on their apps for the competition.

“Richard Pietro, OGT Productions and we try to socialize open government and open data.”

“You have such a variety of skill sets in the room, experience levels, ages, genders, ethnicities. I think it’s one of the most mixed sort of Open Data Iron Chefs that I’ve ever done. So I’m just excited to see the potential just based on that.”

“But I think they’re off to a great start. They’re definitely, you know, eager. That was clear from the onset. As soon as we said ‘Go,’ everybody got into their teams. And it’s as though the conversation was like—as though they’ve been having this conversation for years.”

For many students, the experience was a memorable one. Groups found the competition interesting as they worked to conceptualize an application for most of the afternoon before presenting it to the institute as a whole.

“More in general, just about the sort of the challenge we have today: It’s kind of interesting coming from like an academic sort of standpoint, especially in my master of arts, there is a lot of theory around like the potential benefits of open data. So it’s kind of nice to actually be working on something that could potentially have real implications, you know?”

That’s Mark Gill, a student in attendance from the University of British Columbia, Okanagan. His group worked with open data from the Association of Bay Area Governments Resilience Program to better inform neighborhoods about their level of vulnerability to natural hazards such as earthquakes, floods, or storms. The application they later conceptualized allowed users to measure their general neighborhood vulnerability. Individual users could also enter their own socioeconomic data to gauge their personal vulnerability.

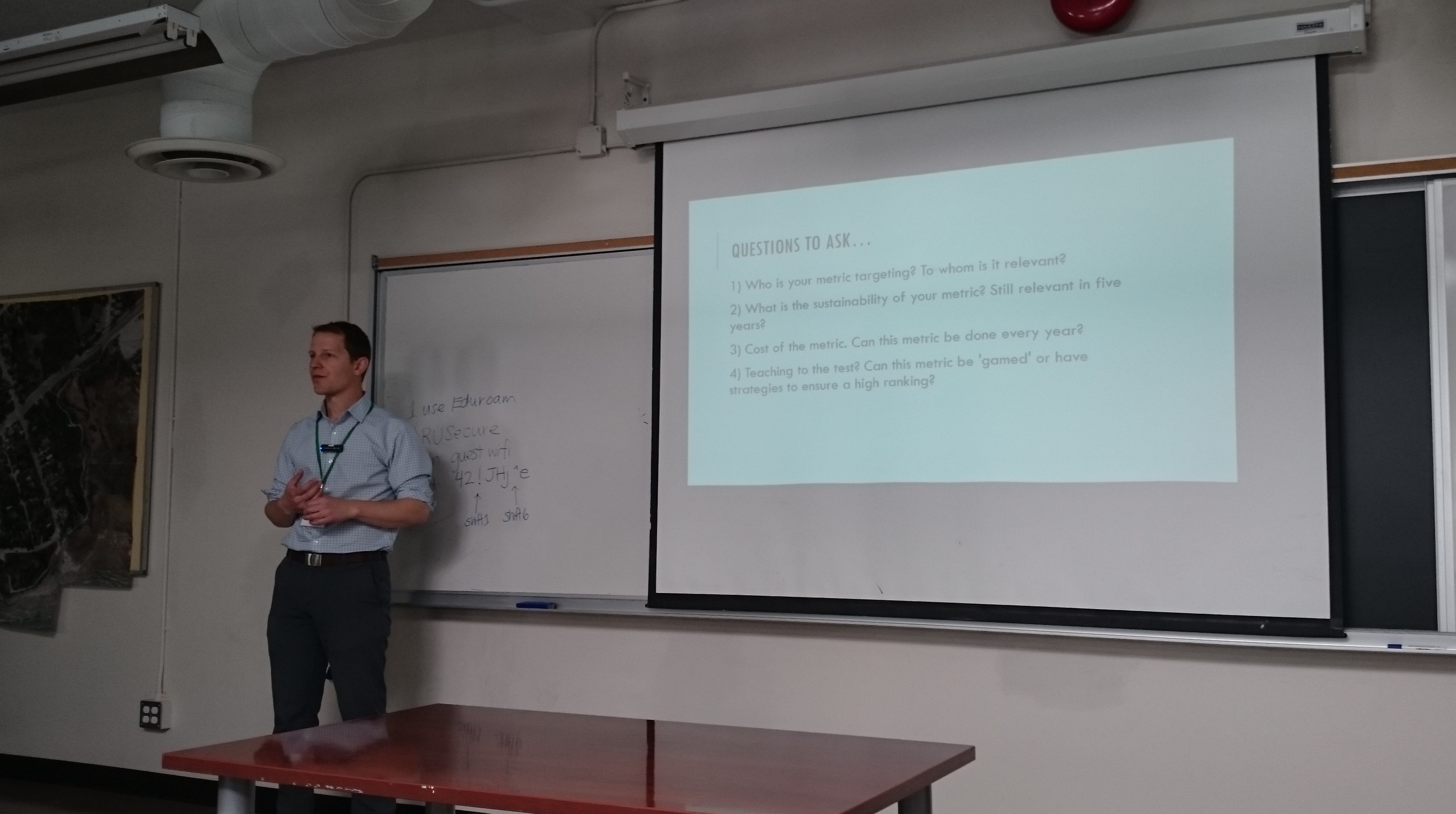

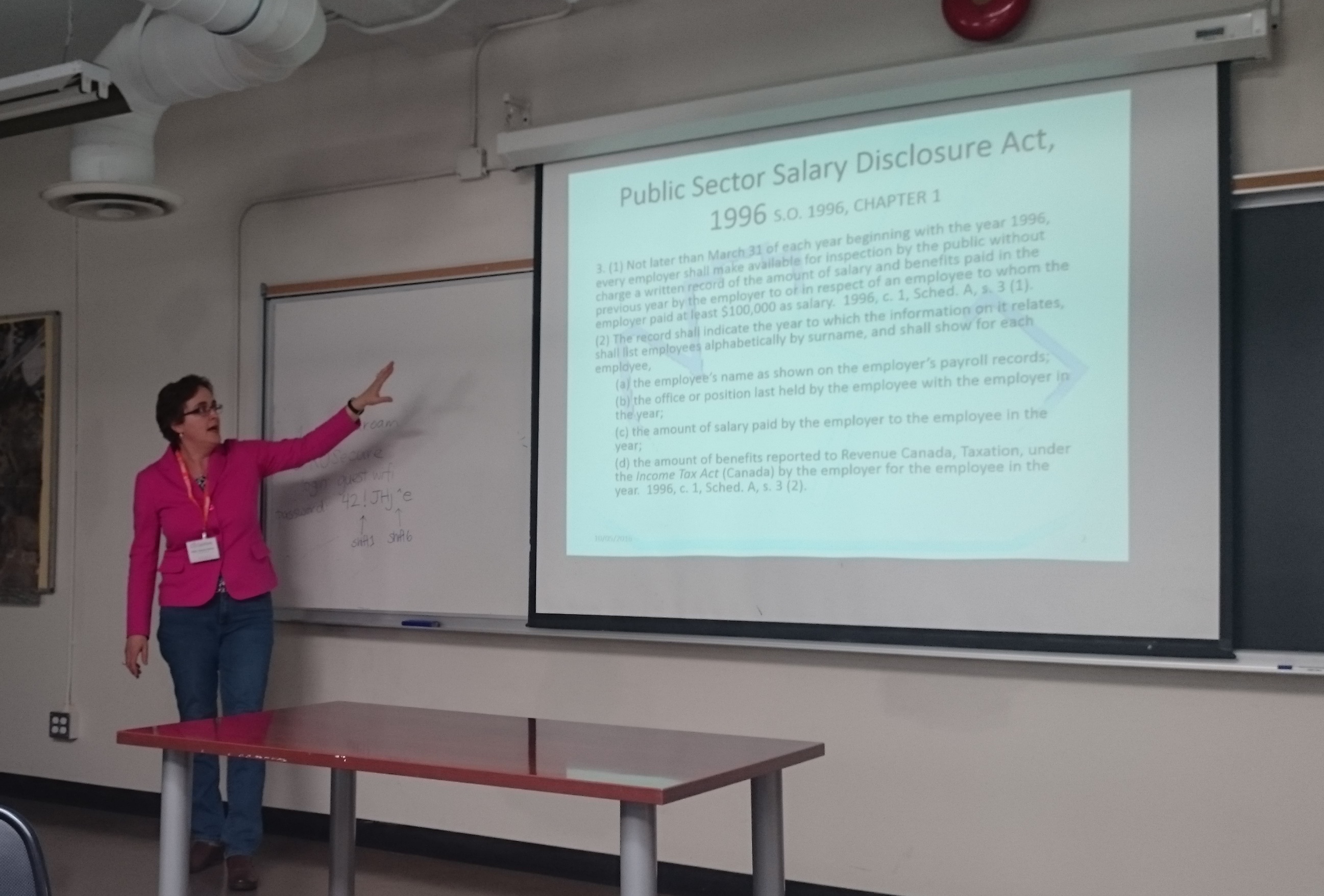

On day two, students heard from four members of Geothink’s faculty on their unique disciplinary perspectives on how to value open data. Here we catch up with Geothink Head Renee Sieber, an associate professor in McGill University’s Department of Geography and School of Environment, as she provided students a summary of methods for evaluating open data. Sieber started her talk by detailing many of the common quantitative metrics used, including the counting of applications generated at a hackathon, the number of citizens engaged, or the economic output from a particular dataset.

“There’s a huge leap to where you start to think about how do you quantify the improvement of citizen participation? How do you quantify the increased democracy or the increased accountability that you might have? So you can certainly assign a metric to it. But how do you actually attach a value to that metric? So, I basically have a series of questions around open data valuation. I don’t have a lot of answers at this point. But they’re the sort of questions that I’d like you to consider.”

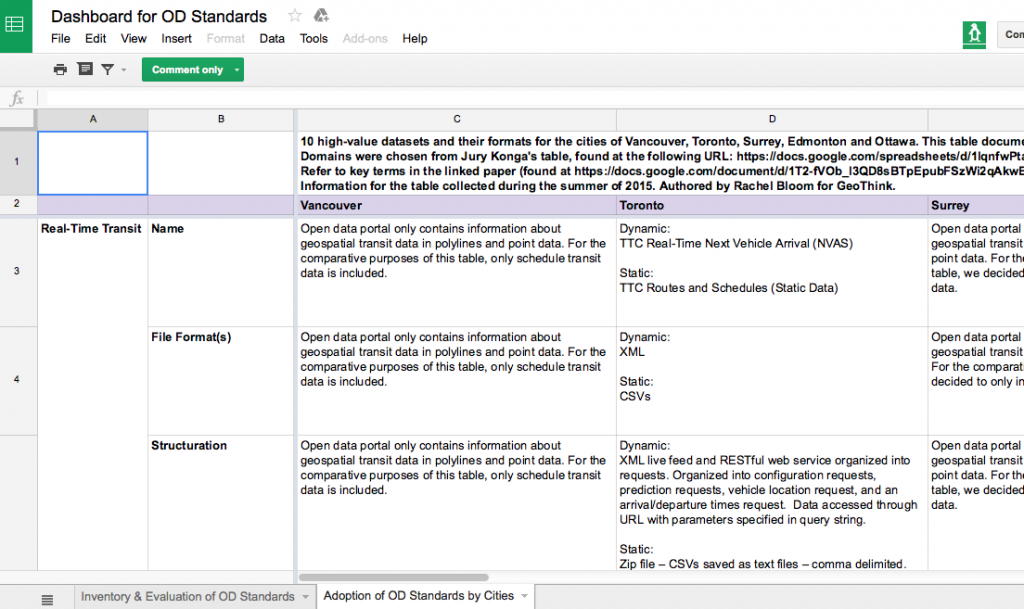

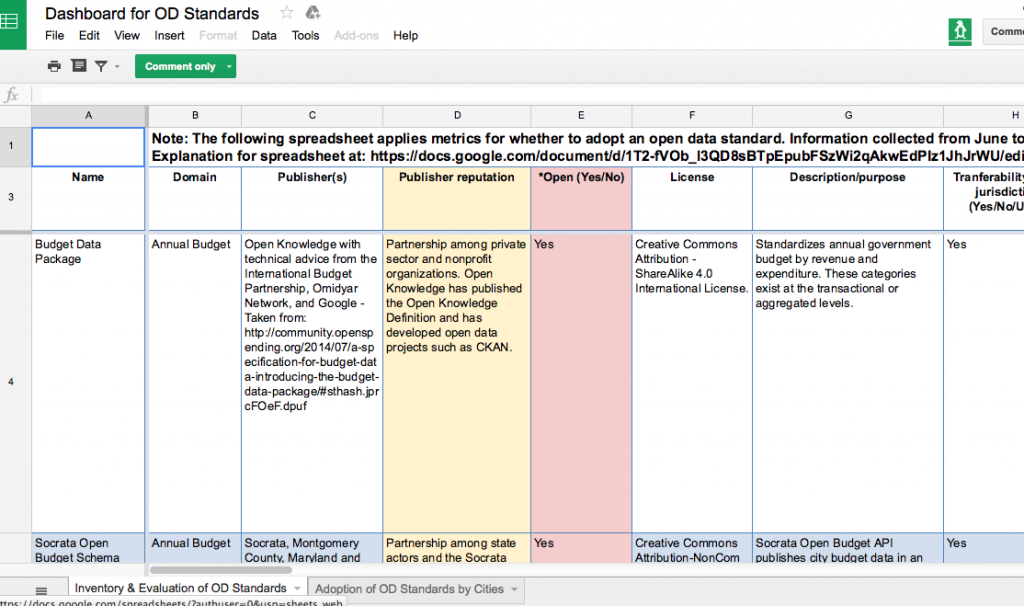

After hearing from the four faculty members, students spent the rest of the day working in groups to first create measures to value open data, and, second, role-play how differing sectors might use a specific type of data. In between activities on day two, students also heard from a panel of municipal officials and representatives of Toronto-based organizations working with open data.

On day three, students transitioned to taking part in a writing-skills incubator workshop run by Ryerson University School of Journalism associate professors Ann Rahaula and April Lindgren. Students were able to learn from the extensive experience both professors have had in the journalism profession.

“I’m going to actually talk a little about, more broadly, about getting your message out in different ways, including and culminating with the idea of writing a piece of opinion. And, you know, today’s going to be mostly about writing and structuring an Op-ed piece. But I thought I want to spend a few minutes talking about the mechanics of getting your message out—some sort of practical things you can do. And of course this is increasingly important for all the reasons that Ann was talking about and also because the research granting institutions are putting such an emphasis on research dissemination. In other words, getting the results of your work out to organizations and the people who can use it.”

For most of her talk, Lindgren focused on three specific strategies.

“So, one is becoming recognized as an expert and being interviewed by the news media about your area of expertise. The second is about using Twitter to disseminate your work. And the third is how to get your Op-ed or your opinion writing published in the mainstream news media whether it’s a newspaper, an online site, or even if you’re writing for your own blog or the research project, or the blog of the research project that you’re working on.”

Both Lindgren and Rahaula emphasized how important it is for academics to share their work to make a difference and enrich the public debate. Such a theme is central to Geothink, which emphasizes partnerships between researchers and actual practitioners in government, private, and non-profit sectors. Such collaboration makes possible unique research that has direct impacts on civil society.

At the institute, this focus was illustrated by an invitation Geothink extended to Civic Tech Toronto for a hackathon merging the group’s members with Geothink’s students. Taking place on the evening of day two, the hack night featured a talk by Sieber and hands-on work on the issues Toronto citizens find most important to address in their city. Much like the institute itself, the night gave students a chance to apply their skills and knowledge to real applications in the city they were visiting.

[Geothink.ca theme music]

[Voice over: Geothoughts are brought to you by Geothink.ca and generous funding from Canada’s Social Sciences and Humanities Research Council.]

###

If you have thoughts or questions about this podcast, get in touch with Drew Bush, Geothink’s digital journalist, at drew.bush@mail.mcgill.ca.