This post is cross-posted with permission from April Lindgren and Christina Wong at the Local News Research Project.

By April Lindgren and Christina Wong

Introduction

Concerns about how neighbourhoods are portrayed in the news have surfaced regularly in the Toronto area over the years. But are those concerns valid?

Interactive maps produced by the Local News Research Project (LNRP) at Ryerson University’s School of Journalism are designed to help Toronto residents answer this question. The maps give the public access to data the research project collected on local news coverage by the Toronto Star and the online news website OpenFile.ca. Members of the public and researchers can use the maps to:

- get an overall sense of where news in the city is – and isn’t – covered

- compare patterns of local news coverage by two different news organizations

- examine the city-wide geographic patterns of reporting on crime, entertainment and other major news topics

- examine news coverage in each of Toronto’s 44 wards, including how often news stories and photographs reference locations in a ward

- see what story topics are covered in each ward

The maps are based on the Toronto Star’s local news coverage published on 21 days between January and August 2011. Researchers have found that a two-week sample of news is generally representative of news coverage over the course of a year (Riffe, Aust & Lacy, 1993). The data for OpenFile.ca, which suspended publishing in 2012, were collected for every day between January and August 2011.
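The post does not say how the Star’s 21 days were chosen. One common design, and the kind Riffe, Aust & Lacy evaluate, is the constructed week: each weekday (Monday through Sunday) is drawn at random from across the study window, so three constructed weeks yield 21 days. The sketch below assumes that design; the function name, the seed and the 1 January to 31 August window are illustrative assumptions, not the LNRP’s documented procedure.

```python
import random
from datetime import date, timedelta

def constructed_weeks(start, end, n_weeks, seed=None):
    """Assumed constructed-week design: for each weekday (Mon..Sun),
    draw n_weeks distinct dates falling on that weekday between start and end."""
    rng = random.Random(seed)
    # Group every date in the window by weekday (0 = Monday ... 6 = Sunday).
    by_weekday = {wd: [] for wd in range(7)}
    day = start
    while day <= end:
        by_weekday[day.weekday()].append(day)
        day += timedelta(days=1)
    sample = []
    for candidates in by_weekday.values():
        # Sampling without replacement keeps the chosen dates distinct.
        sample.extend(rng.sample(candidates, n_weeks))
    return sorted(sample)

# Three constructed weeks over a hypothetical January-August 2011 window.
days = constructed_weeks(date(2011, 1, 1), date(2011, 8, 31), n_weeks=3, seed=2011)
print(len(days))  # 21
```

Drawing by weekday rather than picking 21 consecutive days guards against the weekly cycle in newspaper content (for example, thinner weekend editions) skewing the sample.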

Readers can go straight to the maps or continue reading to find out more about news coverage and neighbourhood stereotyping, how the maps work, and the role of open data sources in this project.

Local news and neighbourhood stereotyping

The decision to explore news coverage of Toronto neighbourhoods was prompted by concerns expressed by citizens and local politicians about how certain parts of the city are portrayed in the local media. Residents were furious (Pellettier, Brawley & Yuen, 2013), for instance, when Toronto Star columnist Rosie DiManno referred to the city’s Scarborough area as “Scarberia” in an article about former mayor Rob Ford’s re-election campaign (DiManno, 2013). Back in 2007, then-mayor David Miller went so far as to contact all of the city’s news media, asking them to cite the nearest main intersection rather than reporting more generally that a particular crime had occurred in Scarborough (Maloney, 2007). In Toronto’s west end, the local city councillor suggested that negative connotations associated with the Jane and Finch neighbourhood could be defused by renaming it University Heights, but residents vehemently rejected the idea (Aveling, 2009).

A study that investigated how the Toronto Star covered Toronto’s most disadvantaged neighbourhoods found very little news coverage of these communities (Lindgren, 2009). The study, which examined Toronto Star local news reporting in 2008, also found that crime tended to dominate the limited coverage that did exist, and suggested the problem could be rectified not by ignoring crime stories, but by increasing coverage of other issues in those communities.

Exploring the maps

The interactive maps allow users to explore local news coverage in the City of Toronto. A sample of local stories and photographs published in 2011 by the Toronto Star (the local newspaper with the largest circulation in the city) and OpenFile.ca (a community-based news website) was identified and analyzed to capture data about story topics and mentions of geographic locations.

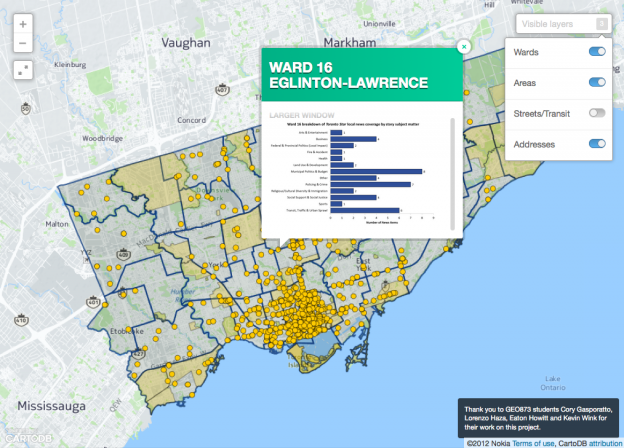

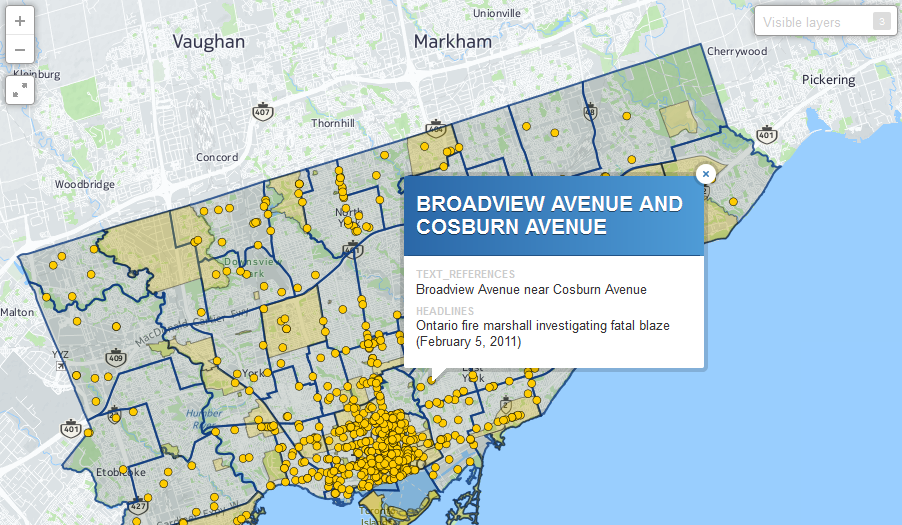

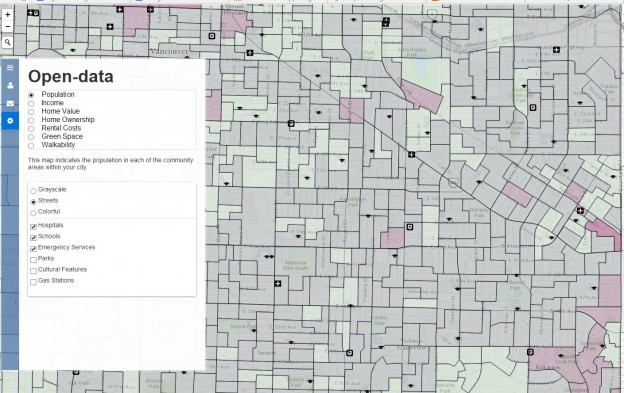

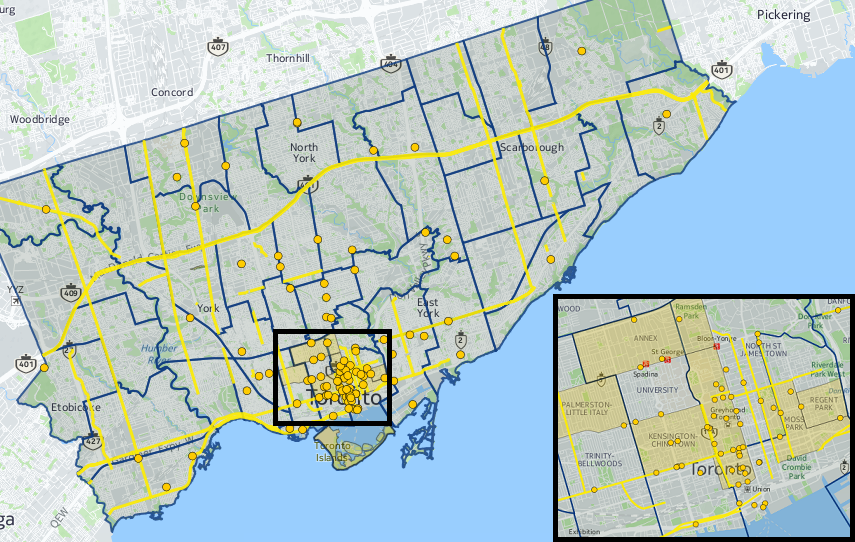

These maps make the data available to the public in a way that allows users to explore and compare media coverage in different areas of the city. Users can zoom in on a neighbourhood and see all of the locations referenced within it. Each point on the map represents a location that was referenced in one or more news items. Users can click on any of these points to see a list of the news articles associated with that location (Figure 1).

- Figure 1. Users can click each point to find out about the news articles that referenced the location

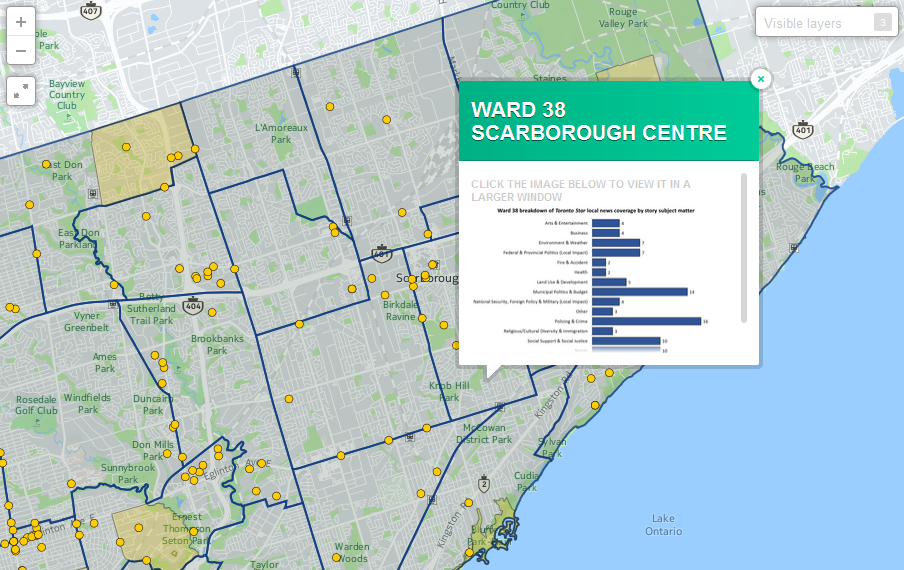

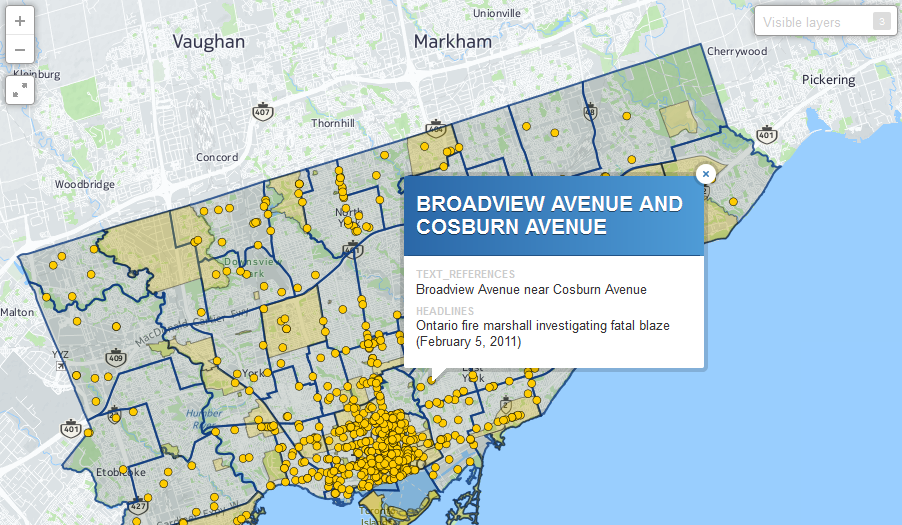
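The click-to-list behaviour described above amounts to an index from each referenced location to the news items that mention it. The sketch below is a minimal illustration, not the LNRP’s schema: the `NewsItem` record, its field names and the sample stories are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class NewsItem:
    # Hypothetical record layout; the project's actual coding fields are not published here.
    headline: str
    topic: str
    locations: tuple  # names of geographic locations referenced in the item

def index_by_location(items):
    """Map each referenced location to the news items that mention it.
    Each key corresponds to one point on the map; its value is the list
    a user would see after clicking that point."""
    index = defaultdict(list)
    for item in items:
        for place in set(item.locations):  # list each item once per place
            index[place].append(item)
    return dict(index)

items = [
    NewsItem("Hypothetical story A", "crime", ("Jane St & Finch Ave",)),
    NewsItem("Hypothetical story B", "transit", ("Jane St & Finch Ave", "Union Station")),
]
index = index_by_location(items)
print([i.headline for i in index["Jane St & Finch Ave"]])
# ['Hypothetical story A', 'Hypothetical story B']
```

Because one story can mention several places, the same item may appear under several points, which matches the idea that a point represents a location referenced in one or more news items.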

By clicking within a ward boundary, users can also access a summary chart describing the breakdown by subject of all local news coverage in that ward. Users interested in the Scarborough area, for instance, can zoom into that area on the map and click on each Scarborough ward to see what sorts of stories (crime, transit, entertainment, sports, etc.) were reported on in that ward (Figure 2).

- Figure 2. Users can click within a ward to access charts summarizing news coverage by topic

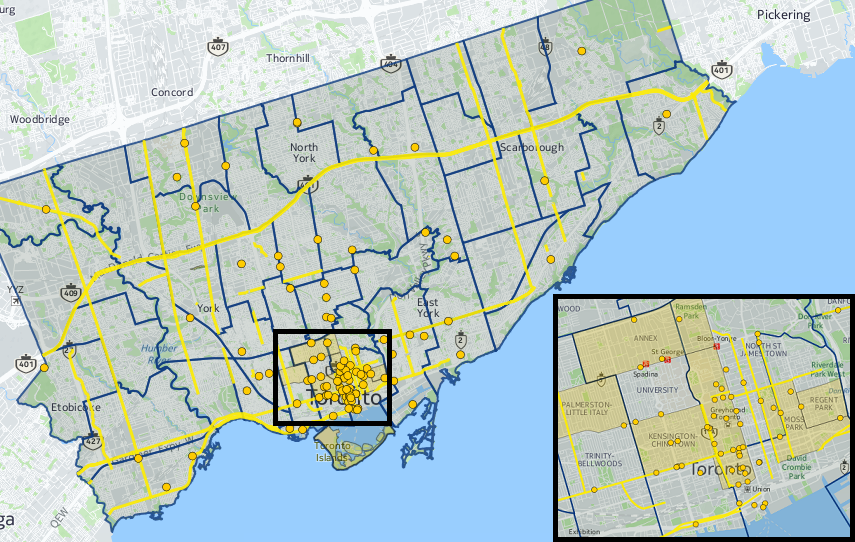
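The post does not describe how the web map decides which ward a referenced location falls in; GIS software normally handles this. As a rough sketch of the idea, a ward-level topic summary can be built by testing each point against the ward boundary with the standard ray-casting point-in-polygon test and tallying topics. The square "ward", the points and the planar coordinates are hypothetical; real ward boundaries would need a proper coordinate system.

```python
from collections import Counter

def point_in_polygon(x, y, polygon):
    """Ray-casting test: count how many polygon edges a horizontal ray
    from (x, y) toward +x crosses; an odd count means the point is inside."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray, so y1 != y2
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def topic_summary(ward_polygon, points):
    """Tally story topics for all referenced locations inside one ward.
    `points` is a list of (x, y, topic) tuples."""
    return Counter(topic for x, y, topic in points
                   if point_in_polygon(x, y, ward_polygon))

# Hypothetical square ward and four referenced locations.
ward = [(0, 0), (10, 0), (10, 10), (0, 10)]
points = [(2, 3, "crime"), (5, 5, "crime"), (7, 1, "transit"), (15, 5, "sports")]
summary = topic_summary(ward, points)
print(summary.most_common())  # [('crime', 2), ('transit', 1)]
```

The resulting counts are the kind of breakdown a ward summary chart would display; the point at (15, 5) lies outside the ward and is excluded.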

Users interested in how and where a particular news topic is covered can access separate interactive maps for the top five subjects covered by the two news sources. Figure 3, for example, shows all locations mentioned in crime and policing stories published by the Toronto Star during the study’s sample period.

- Figure 3. Toronto Star coverage of crime and policing news
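Producing the topic maps involves two steps: finding each outlet’s most frequent subjects, then keeping only the locations referenced in stories on one subject. A minimal sketch, assuming hypothetical `(outlet, topic, location)` records; the function names and data are illustrative only.

```python
from collections import Counter

# Hypothetical coded records: (outlet, topic, referenced location).
records = [
    ("Star", "crime", "Location A"),
    ("Star", "crime", "Location B"),
    ("Star", "crime", "Location A"),
    ("Star", "transit", "Location C"),
    ("Star", "transit", "Location D"),
    ("Star", "sports", "Location E"),
    ("OpenFile", "politics", "Location F"),
]

def top_topics(records, outlet, n=5):
    """Return up to n of the outlet's most frequent topics, most common first."""
    counts = Counter(topic for src, topic, _ in records if src == outlet)
    return [topic for topic, _ in counts.most_common(n)]

def topic_layer(records, outlet, topic):
    """Distinct locations to plot on the map for one outlet and one topic."""
    return sorted({place for src, t, place in records if src == outlet and t == topic})

print(top_topics(records, "Star"))            # ['crime', 'transit', 'sports']
print(topic_layer(records, "Star", "crime"))  # ['Location A', 'Location B']
```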

The role of open data sources in creating these maps

A total of 23 pre-existing datasets, including relevant open datasets that were publicly available online in 2008, were used to support the creation of these interactive maps. The datasets were used to populate a list of geographic locations in the Greater Toronto Area (GTA) that could potentially be referenced in local news stories. Each dataset was assigned unique numerical codes, and all 23 datasets were appended to a geographic reference table that coders could search. This reference list of geographic locations and features made the coding process more accurate and efficient: coders entering information about spatial references in local news items could select many of the referenced locations from the pre-populated list rather than entering them manually. This reduced the opportunity for human error and sped up the coding process.
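The post explains that the 23 datasets were appended into one searchable reference table with unique numerical codes, but not what the codes looked like or how coders searched. The sketch below assumes a hypothetical code format (dataset number plus row number) and a simple case-insensitive substring search; the dataset names are placeholders, not the project’s actual sources.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Place:
    code: str     # hypothetical format: "<dataset number>-<row number>"
    name: str
    dataset: str

def build_reference_table(datasets):
    """Append several source datasets into one table.
    `datasets` maps a dataset name to a list of place names; each dataset
    gets its own numeric prefix so every row receives a unique code."""
    table = []
    for ds_num, (ds_name, names) in enumerate(datasets.items(), start=1):
        for row_num, name in enumerate(names, start=1):
            table.append(Place(f"{ds_num:02d}-{row_num:05d}", name, ds_name))
    return table

def search(table, query):
    """Case-insensitive substring search, as a coder might type into a lookup box."""
    q = query.lower()
    return [p for p in table if q in p.name.lower()]

datasets = {
    "parks (hypothetical)": ["High Park", "Trinity Bellwoods Park"],
    "transit stations (hypothetical)": ["Union Station", "Finch Station"],
}
table = build_reference_table(datasets)
print([p.name for p in search(table, "station")])  # ['Union Station', 'Finch Station']
```

Selecting a row from such a list stores its code rather than free text, which is why a pre-populated table cuts down on misspellings and inconsistent place names.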

We would have preferred to use more open data sources during the initial development of the database, but this wasn’t possible because few open datasets with the spatial attributes that make mapping possible were available. At that time, only two of the 23 datasets used (approximately 8.7% of the total) were available from open data sources in a format that included geography (such as shapefiles). Both files were obtained from the City of Toronto’s Open Data website. As a result, most of the database relied on contributions from private data sources.

The situation has improved over time as more open government data have become available in geographic file formats that support research using spatial analysis. As of mid-2015, six more of the 23 datasets used in the database (two federal, one provincial and three municipal) had become available as open data. If we were creating the database today, a total of eight datasets, or 34.8% of the initial database, could be populated using open data sources (Table 1).

Table 1. Availability of open data sources

| Data source | When the database was created (2008) | Currently (mid-2015) |
| --- | --- | --- |
| Private sources | 21 | 15 |
| Government open data | 2 (8.7% of database) | 8 (34.8% of database) |
| Total # of datasets | 23 | 23 |
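The percentages in Table 1 are simply shares of the 23 datasets: 2 of 23 in 2008 and 2 + 6 = 8 of 23 by mid-2015, with the remainder coming from private sources. A quick check of that arithmetic (the function name is illustrative):

```python
TOTAL_DATASETS = 23  # datasets in the geographic reference table

def open_share(n_open, total=TOTAL_DATASETS):
    """Percentage of datasets available as open data, to one decimal place."""
    return round(100 * n_open / total, 1)

open_2008 = 2
open_mid_2015 = open_2008 + 6  # six more became available by mid-2015

for label, n_open in [("2008", open_2008), ("mid-2015", open_mid_2015)]:
    print(f"{label}: {n_open} open, {TOTAL_DATASETS - n_open} private, "
          f"{open_share(n_open)}% open")
# 2008: 2 open, 21 private, 8.7% open
# mid-2015: 8 open, 15 private, 34.8% open
```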

Since 2008, the Government of Canada has launched its own open data portal, joined the Open Government Partnership alongside other countries supporting the release of open government data, and adopted the G8 Open Data Charter (Standing Committee on Government Operations and Estimates, 2014). Provincial and municipal governments have made similar improvements to open data access. The Government of Ontario launched an online open data catalogue in 2012 and is currently developing an Open Data Directive to be implemented later this year (Fraser, 2015). The City of Toronto introduced its open data portal in 2009 and developed an Open Data Policy in 2012 (City of Toronto, n.d.).

As Table 1 suggests, however, further improvements are required to reduce barriers to research and innovation. A report from the Standing Committee on Government Operations and Estimates, for instance, recommended that the federal government provide data at smaller levels of geography, work with other levels of government to establish standards and release data, and provide a greater variety of open data from all government departments. The report noted that the release of open data can improve government efficiency, foster citizen engagement, and encourage innovation (Standing Committee on Government Operations and Estimates, 2014). Academic researchers have argued that greater availability of open government data would stimulate research and generate economic and social value (Jetzek, Avital & Bjorn-Andersen, 2014; Kucera, 2015; Zuiderwijk, Janssen & Davis, 2014). Journalists are also pushing for easier and greater access to data (Schoenhoff & Tribe, 2014).

Conclusion

Research conducted by the Local News Research Project was made possible by public funds; as such, the data should be widely available. The interactive maps are an attempt to fulfill that obligation.

While the maps capture only a snapshot of news coverage at a fixed point in time, they nonetheless demonstrate the importance of geospatial analysis in local news research (Lindgren & Wong, 2012). They are also a powerful data visualization tool that allows members of the public to independently explore media portrayals of neighbourhoods and the extent to which some parts of a city are represented in the news while others are largely ignored.

Finally, this mapping project illustrates both how open government data can foster research and how much remains to be done to make data available to the public in useful formats.

The Local News Research Project was established in 2007 to explore the role of local news in communities. Funding for this research has been provided by Ryerson University, CERIS-The Ontario Metropolis Centre and the Social Sciences and Humanities Research Council.

About the authors: April Lindgren is an Associate Professor in Ryerson University’s School of Journalism and Academic Director of the Ryerson Journalism Research Centre. Christina Wong is a graduate of Ryerson University’s Geographic Analysis program. Initial work on the maps was done in 2014 by GEO873 students Cory Gasporatto, Lorenzo Haza, Eaton Howitt and Kevin Wink from Ryerson University’s Geographic Analysis program.

References

Aveling, N. (2009, January 8). Area now being called University Heights, but some call change a rejection of how far we’ve come. Toronto Star, p. A10.

City of Toronto. (n.d.). Open Data Policy. Retrieved from http://www1.toronto.ca/wps/portal/contentonly?vgnextoid=7e27e03bb8d1e310VgnVCM10000071d60f89RCRD

DiManno, R. (2013, July 6). Ford fest makes a strategic move. Toronto Star, p. A2.

Fraser, D. (2015, May 1). Ontario announces more open data, public input. St. Catharines Standard. Retrieved from http://www.stcatharinesstandard.ca/2015/05/01/ontario-announces-more-open-data-public-input

Jetzek, T., Avital, M. & Bjorn-Andersen, N. (2014). Data-driven innovation through open government data. Journal of Theoretical and Applied Electronic Commerce Research, 9(2), 100-120.

Kucera, J. (2015). Open government data publication methodology. Journal of Systems Integration, 6(2), 52-61.

Lindgren, A. (2009). News, geography and disadvantage: Mapping newspaper coverage of high-needs neighbourhoods in Toronto, Canada. Canadian Journal of Urban Research, 18(1), 74-97.

Lindgren, A. & Wong, C. (2012). Want to understand local news? Make a map. 2012 Journalism Interest Group proceedings. Paper presented at Congress 2012 of the Humanities and Social Sciences conference. Retrieved from http://cca.kingsjournalism.com/?p=169

Maloney, P. (2007, January 16). Mayor sticks up for Scarborough. Toronto Star. Retrieved from http://www.thestar.com/news/2007/01/16/mayor_sticks_up_for_scarborough.html?referrer=

Pellettier, A., Brawley, D. & Yuen, S. (2013, July 11). Don’t call us Scarberia [Letter to the editor]. Toronto Star. Retrieved from http://www.thestar.com/opinion/letters_to_the_editors/2013/07/11/dont_call_us_scarberia.html

Riffe, D., Aust, C. F. & Lacy, S. R. (1993). The effectiveness of random, consecutive day and constructed week sampling. Journalism Quarterly, 70, 133-139.

Schoenhoff, S. & Tribe, L. (2014). Canada continues to struggle in Newspapers Canada’s annual FOI audit [web log post]. Retrieved from https://cjfe.org/blog/canada-continues-struggle-newspapers-canada%E2%80%99s-annual-foi-audit

Standing Committee on Government Operations and Estimates. (2014). Open data: The way of the future: Report of the Standing Committee on Government Operations and Estimates. Retrieved from http://www.parl.gc.ca/content/hoc/Committee/412/OGGO/Reports/RP6670517/oggorp05/oggorp05-e.pdf

Zuiderwijk, A., Janssen, M. & Davis, C. (2014). Innovation with open data: Essential elements of open data ecosystems. Information Polity, 19(1, 2), 17-33.